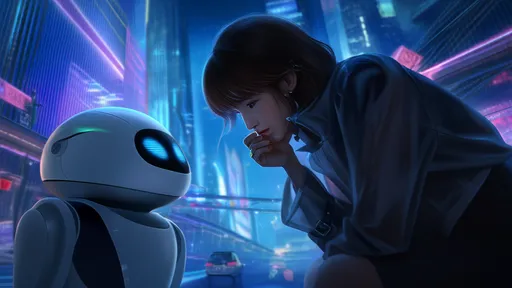

The rise of pet robots has ushered in a new era of companionship, blurring the lines between artificial intelligence and emotional connection. As these lifelike machines become more sophisticated, society grapples with the ethical implications of their role in human lives. The question of where to draw the boundary between tool and emotional substitute grows increasingly complex.

For many, pet robots offer solace where traditional pets cannot. Elderly individuals in care homes, patients with severe allergies, or those living in spaces inhospitable to animals find comfort in these artificial companions. The robots respond to touch, recognize voices, and simulate affection through programmed behaviors. Some studies report reduced loneliness and improved mental health metrics among users who interact with them regularly. Yet beneath the surface of these benefits lies an uncomfortable truth: we may be outsourcing emotional needs to machines incapable of genuine reciprocity.

The manufacturing of emotional dependence raises significant concerns. Unlike biological pets that form authentic bonds through mutual interaction, pet robots operate on predetermined algorithms. Their "affection" represents clever programming rather than sentient choice. This creates an asymmetrical relationship where humans project feelings onto objects designed to exploit that very tendency. Ethicists warn that prolonged exposure could distort users' expectations of real relationships, potentially making human connections seem disappointing by comparison.

Children represent a particularly vulnerable demographic in this emerging landscape. Developing minds exposed to pet robots might struggle to distinguish between simulated and authentic care. The danger lies not in the temporary comfort these devices provide, but in the potential normalization of one-sided emotional investment. Pediatric psychologists observe that children often anthropomorphize toys naturally; pet robots amplify this tendency with sophisticated responses that mimic consciousness without containing it.

Manufacturers walk an ethical tightrope as they design increasingly convincing artificial companions. Some companies openly market their products as emotional support alternatives, while others position them as temporary supplements to human interaction. The lack of industry-wide standards or regulatory oversight means each corporation sets its own boundaries regarding how deeply its products should simulate emotional connections. This commercial free-for-all creates a landscape where profit motives may override psychological considerations.

The therapeutic applications of pet robots present both promise and peril. Dementia patients often respond positively to robotic companions, displaying reduced agitation and improved social engagement. However, the same technology that comforts the cognitively impaired could potentially manipulate the emotionally vulnerable. The absence of clear guidelines governing emotional robotics in care settings leaves healthcare providers navigating uncharted ethical waters.

Cultural attitudes significantly influence acceptance of pet robots as emotional substitutes. Eastern markets, particularly Japan, demonstrate greater comfort with human-robot relationships than Western societies do. This divergence suggests that emotional boundaries with technology may be culturally fluid rather than universal. Anthropologists note that societies with stronger traditions of animism (attributing souls to inanimate objects) tend to adapt more readily to emotional robotics.

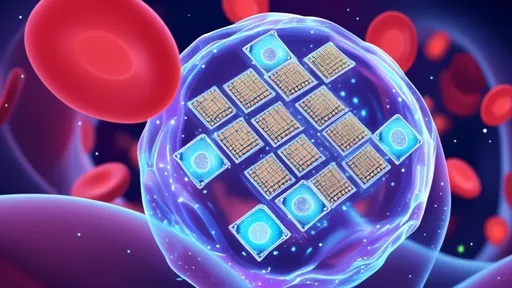

Privacy concerns add another layer of complexity to the ethics of pet robots. Many models collect vast amounts of personal data through continuous interaction, recording intimate moments of vulnerability. The commercialization of this emotional data remains largely unregulated, creating potential for exploitation. Users pouring their hearts into artificial companions may unknowingly be feeding corporate databases with sensitive psychological profiles.

The environmental impact of emotional robotics warrants consideration alongside psychological effects. The production and disposal of complex electronic companions carry ecological consequences rarely discussed in marketing materials. Unlike living pets that decompose naturally, defunct pet robots contribute to growing e-waste problems. This physical toll adds another dimension to the ethical calculus surrounding their use as emotional surrogates.

Philosophical questions underpin the entire debate about pet robots and emotional substitution. What defines authentic connection? Can simulated care fulfill fundamental human needs without causing psychological distortion? As technology advances, these questions grow more urgent. Some theorists argue that if a relationship produces positive emotional outcomes, its artificial nature becomes irrelevant. Others maintain that the absence of genuine mutuality makes such connections inherently deficient.

Looking forward, the development of pet robot technology shows no signs of slowing. Next-generation models promise even greater emotional intelligence through advanced AI and responsive materials that mimic living tissue. This trajectory forces society to confront whether emotional boundaries should be drawn at capability thresholds or remain rooted in biological origins. The answers may redefine how future generations understand companionship itself.

Ultimately, the ethical use of pet robots as emotional substitutes may hinge on transparency and intentionality. Clear communication about their limitations, coupled with guidelines preventing exploitative design, could help maintain healthy boundaries. As with many technological advancements, the tool itself may be neutral, with its ethical value determined by how humans choose to use it. The challenge lies in developing wisdom equal to our innovation.

By /Jul 15, 2025
